Hot Chips Heavy Hitter: IBM Tackles Generative AI With Two Processors

Announced this week at Hot Chips 2024, the new processors use a scalable I/O sub-system to slash energy consumption and data center footprint.

At the 2024 Hot Chips conference this week, IBM announced two new AI processors, the Telum II and Spyre Accelerator. According to the company, these processors are set to drive the next generation of IBM Z mainframe systems, specifically enhancing AI capabilities, including large language models (LLMs) and generative AI.


The new processors from IBM

IBM designed the processors to maintain the high levels of security, availability, and performance for which IBM mainframes are known. At Hot Chips, All About Circuits heard firsthand from Chris Berry, a microprocessor designer at IBM, about the new processors.

Architectural Innovations in Telum II

IBM has significantly improved Telum II over its predecessor, with a higher clock frequency, larger caches, and new features such as an integrated data processing unit.

“We designed Telum II so that the cores can offload AI operations to any of the other seven adjacent processor chips in the drawer,” Berry said. “It provides each core access to a much larger pool of AI compute, reducing the contention for the AI accelerators.”

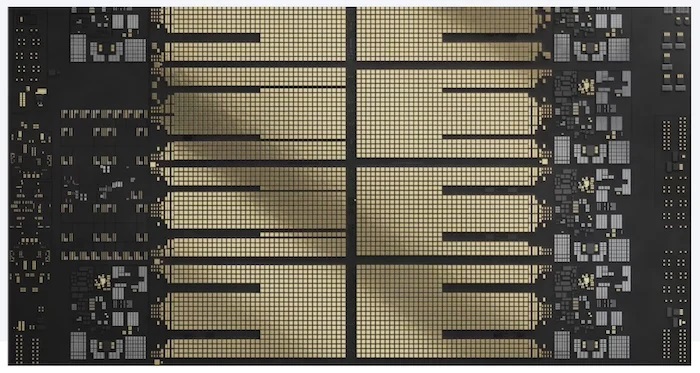

The processor uses eight high-performance cores, each running at a fixed 5.5 GHz, along with an integrated on-chip AI accelerator. The accelerator is invoked directly through the processor's CISC instruction set, enabling low-latency AI operations. Unlike traditional accelerators that rely on memory-mapped I/O, Telum II's AI accelerator executes matrix multiplications and other AI primitives as native instructions, reducing overhead and improving throughput. Each chip's AI accelerator delivers 24 TOPS, four times the compute capacity of its predecessor.
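Berry's description of cores offloading AI work to any accelerator in the drawer can be illustrated with a toy scheduler. This is a minimal sketch, not IBM's firmware or hardware logic: it assumes eight chips per drawer (the local chip plus the seven neighbors Berry mentions), 24 TOPS per accelerator, and a hypothetical least-loaded dispatch policy. The names `Accelerator`, `queued_ops`, and `dispatch` are illustrative only.

```python
from dataclasses import dataclass

TOPS_PER_CHIP = 24    # per-chip AI accelerator throughput quoted for Telum II
CHIPS_PER_DRAWER = 8  # local chip plus the seven adjacent chips in the drawer


@dataclass
class Accelerator:
    chip_id: int
    queued_ops: int = 0  # hypothetical measure of outstanding AI work


def dispatch(accels: list[Accelerator], local_chip: int, ops: int) -> int:
    """Send work to the least-loaded accelerator in the drawer (hypothetical policy).

    Ties are broken in favor of the local chip, avoiding a cross-chip hop.
    Returns the chip_id that received the work.
    """
    target = min(accels, key=lambda a: (a.queued_ops, a.chip_id != local_chip))
    target.queued_ops += ops
    return target.chip_id


drawer = [Accelerator(chip_id=i) for i in range(CHIPS_PER_DRAWER)]
drawer[0].queued_ops = 100  # the local accelerator is busy

print(dispatch(drawer, local_chip=0, ops=10))  # 1: first idle neighbor
print(CHIPS_PER_DRAWER * TOPS_PER_CHIP, "TOPS in the drawer-wide pool")
```

The 192 TOPS figure is simple arithmetic (8 × 24) and is an upper bound on what a single core could draw on if every other accelerator in the drawer were idle.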

Telum II processor

Cache capacity also increases substantially. Each core has access to a 36 MB L2 cache, and the chip's ten 36 MB L2 caches total 360 MB on-chip. The virtual L3 and L4 caches have grown 40%, to 360 MB and 2.88 GB, respectively. These enhancements allow Telum II to handle large datasets more efficiently, further supporting its AI and transaction-processing capabilities.
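A quick calculation shows how the quoted cache figures fit together. This sketch rests on two inferences from the numbers above rather than on IBM-published formulas: that a chip carries ten 36 MB L2 caches (360 MB / 36 MB), and that the virtual L4 pools the virtual L3 of all eight chips in a drawer.

```python
L2_MB = 36            # size of each L2 cache instance
L2_PER_CHIP = 10      # inferred: 360 MB total / 36 MB per cache
CHIPS_PER_DRAWER = 8  # assumption: virtual L4 spans every chip in the drawer

# Virtual L3: all L2 caches on one chip pooled together.
virtual_l3_mb = L2_MB * L2_PER_CHIP
# Virtual L4: virtual L3 of every chip in the drawer, in decimal GB as quoted.
virtual_l4_gb = virtual_l3_mb * CHIPS_PER_DRAWER / 1000

print(virtual_l3_mb)  # 360
print(virtual_l4_gb)  # 2.88
```

Under these assumptions, 2.88 GB is simply 8 × 360 MB, which matches the figure IBM quotes.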

Telum II’s Data Processing Unit

One of the notable features of the Telum II processor is its integrated data processing unit (DPU).

In enterprise environments where IBM mainframes process billions of transactions daily, I/O efficiency is critical. For this reason, the DPU in Telum II is coherently connected to the processor's symmetric multiprocessing (SMP) fabric and has its own L2 cache.

The DPU architecture includes four processing clusters, each with eight programmable microcontroller cores, totaling 32 cores. These cores are interconnected through a local coherency fabric, which maintains cache coherency across the DPU and integrates it with the main processor. This integration allows the DPU to manage custom I/O protocols directly on-chip.

“By putting the DPU on the processor side of the PCI interface and enabling coherent communication of the DPU with the main processors running the main enterprise workloads, we minimize the communication latency and improve performance and power efficiency,” Berry said. “This is a 70% power reduction for I/O management across the system.”

Moreover, the DPU includes specialized hardware for cyclic redundancy check (CRC) acceleration and a dedicated data path for bulk data transfers so that the cache is not polluted with transient data.
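To show what the DPU's CRC hardware takes off the processor's hands, here is a bit-by-bit CRC-32 computed in software, the kind of per-byte loop that dedicated hardware replaces. The source does not say which CRC polynomial the DPU implements; this sketch uses the common reflected CRC-32 (polynomial 0xEDB88320, as used by IEEE 802.3 Ethernet and zlib) purely as an example, and checks the result against Python's `zlib.crc32`.

```python
import zlib

POLY = 0xEDB88320  # reflected CRC-32 polynomial (IEEE 802.3 / zlib); example only


def crc32_bitwise(data: bytes) -> int:
    """Compute CRC-32 one bit at a time, as a software baseline."""
    crc = 0xFFFFFFFF  # standard initial value
    for byte in data:
        crc ^= byte
        for _ in range(8):  # eight shift-and-XOR steps per byte, LSB first
            if crc & 1:
                crc = (crc >> 1) ^ POLY
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF  # final inversion


payload = b"123456789"
print(hex(crc32_bitwise(payload)))  # 0xcbf43926, the standard CRC-32 check value
assert crc32_bitwise(payload) == zlib.crc32(payload)
```

Eight dependent steps per byte is why CRC is a natural candidate for fixed-function hardware on a path that moves large volumes of I/O data.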

Spyre Accelerator: Enhancing AI at Scale

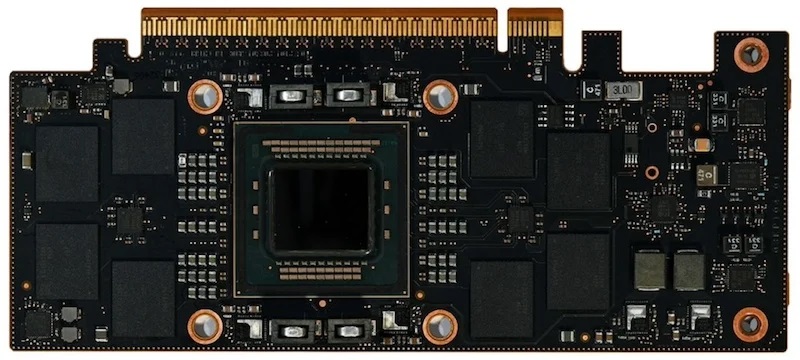

Complementing the Telum II processor is the IBM Spyre accelerator, a dedicated AI chip designed to scale AI capabilities beyond what is achievable on the main processor alone.

The Spyre accelerator is mounted on a 75 W PCIe adapter and features 32 cores, each with 2 MB of scratchpad memory, for a total of 64 MB on-chip. Unlike a conventional cache, which hardware manages automatically, the scratchpad is managed by software, and IBM co-designed the hardware and software to store and move data efficiently during AI computations.
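Because a scratchpad is software-managed, the compiler or runtime must choose data tiles that fit in it. The sketch below checks whether one matrix-multiply tile fits in a single core's 2 MB scratchpad. It is hypothetical throughout: the tile shapes, the choice to hold A, B, and C tiles at once, the function name `tile_fits`, and treating 2 MB as 2 MiB are assumptions, not details of IBM's Spyre software stack.

```python
SCRATCHPAD_BYTES = 2 * 1024 * 1024  # per-core scratchpad, treated as 2 MiB
# Bytes per element for the data types Spyre is said to support.
BYTES_PER_ELEM = {"int4": 0.5, "int8": 1, "fp8": 1, "fp16": 2}


def tile_fits(m: int, n: int, k: int, dtype: str = "fp16") -> bool:
    """Return True if A (m x k), B (k x n), and C (m x n) tiles fit together.

    Hypothetical: a real compiler may double-buffer or keep C at higher
    precision; this only illustrates the explicit capacity check.
    """
    elems = m * k + k * n + m * n
    return elems * BYTES_PER_ELEM[dtype] <= SCRATCHPAD_BYTES


# Largest square fp16 tile (in steps of 32) that fits in one core's scratchpad.
best = max(t for t in range(32, 1025, 32) if tile_fits(t, t, t))
print(best)  # 576
```

Smaller data types buy larger tiles: a 608 × 608 tile that overflows the scratchpad in fp16 fits in int8.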

Spyre accelerator

Spyre supports large language models and other compute-intensive AI workloads. With up to 1 TB of memory across eight cards in a single I/O drawer, it enables IBM Z systems to run AI workloads that demand substantial compute power and memory bandwidth. Its cores support int4, int8, fp8, and fp16 data types. When multiple Spyre cards work together, aggregate memory bandwidth scales up to 1.6 TB/s.
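The memory figures translate into model capacity with simple arithmetic. Below, the weight footprint of a hypothetical 70-billion-parameter model is computed for each data type Spyre supports and compared with an even per-card share of 1 TB across eight cards. The model size is an illustrative assumption, decimal units are used throughout, and the estimate covers weights only, ignoring activations and KV cache.

```python
import math

BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "int8": 1.0, "int4": 0.5}
DRAWER_MEMORY_GB = 1000             # "up to 1 TB" across eight cards (decimal)
PER_CARD_GB = DRAWER_MEMORY_GB / 8  # even share per card under that assumption
PARAMS = 70e9                       # hypothetical 70B-parameter model


def weights_gb(params: float, dtype: str) -> float:
    """Weight storage only; activations and KV cache are ignored."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    gb = weights_gb(PARAMS, dtype)
    cards = math.ceil(gb / PER_CARD_GB)  # lower bound on cards needed
    print(f"{dtype}: {gb:.0f} GB of weights, at least {cards} card(s)")
```

The lower-precision formats matter here: halving bytes per parameter halves the weight footprint, which can decide whether a model fits on one card or must be split across several.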

The Synergy Between Telum II and Spyre Accelerator

According to IBM, Telum II and Spyre were designed to work together within the broader mainframe architecture to optimize AI workloads.

Telum II’s on-chip AI accelerator provides immediate, low-latency AI processing capabilities integrated within the main processor. In contrast, the Spyre accelerator offers additional, scalable AI compute power necessary for more complex, large-scale AI models.

IBM says the combination enables ensemble AI, in which multiple AI models, including traditional models and LLMs, are used in tandem. For example, a smaller, energy-efficient model might handle most transactions, while more complex models are reserved for cases that require higher confidence. According to IBM, this approach improves accuracy and makes better use of resources.
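The routing IBM describes, with a small model handling most transactions and a larger one reserved for low-confidence cases, is commonly called a model cascade. The sketch below is a hypothetical illustration of that routing logic, not IBM's software: the models, the 0.9 confidence threshold, and the function names are invented, and pairing the small model with Telum II's on-chip accelerator and the large one with Spyre is an assumption about deployment.

```python
from typing import Callable, Tuple

# A model maps a transaction to (label, confidence in [0, 1]).
Model = Callable[[dict], Tuple[str, float]]


def cascade(txn: dict, small: Model, large: Model,
            threshold: float = 0.9) -> Tuple[str, str]:
    """Run the cheap model first; escalate only when it is not confident enough.

    Returns (label, which_model) so callers can track how often escalation happens.
    """
    label, confidence = small(txn)
    if confidence >= threshold:
        return label, "small"
    label, _ = large(txn)
    return label, "large"


# Hypothetical stand-ins for a compact model and a larger, costlier model.
def small_model(txn: dict) -> Tuple[str, float]:
    if txn["amount"] < 1_000:
        return "ok", 0.97
    return "suspicious", 0.6  # large amounts are ambiguous for the small model


def large_model(txn: dict) -> Tuple[str, float]:
    return ("fraud" if txn.get("new_merchant") else "ok"), 0.99


for txn in [{"amount": 50}, {"amount": 5_000, "new_merchant": True}]:
    print(cascade(txn, small_model, large_model))
# ('ok', 'small')
# ('fraud', 'large')
```

The threshold is the knob that trades cost against accuracy: raising it sends more transactions to the larger model.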

Driving the Next Generation of IBM Z

Together, Telum II and the Spyre Accelerator form an integrated AI platform for IBM's next-generation mainframes.

“We're currently building a test system that will have 96 Spyre cards in it, which will have a total AI inference and compute of 30 peta-ops,” Berry concluded. “That's the scale of additional AI compute we're talking about adding to our next generation IBM Z.”
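Berry's figures imply a per-card throughput that a short calculation recovers. This assumes the 30 peta-ops are spread evenly across the 96 cards and that "peta-ops" means 10^15 operations per second. The source does not state the data type behind either the Spyre or the Telum II figure, so the comparison with Telum II's 24 TOPS is only a rough indicator of scale.

```python
TOTAL_OPS_PER_S = 30e15  # "30 peta-ops" for the 96-card test system
CARDS = 96
TELUM_II_TOPS = 24       # on-chip AI accelerator, per Telum II chip

per_card_tops = TOTAL_OPS_PER_S / CARDS / 1e12
print(per_card_tops)                                # 312.5
print(round(per_card_tops / TELUM_II_TOPS, 1))      # 13.0
```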
