Japan’s NTT-Docomo Uses Quantum Computing to Optimize Cell Networks

Japan’s leading telecommunications company has partnered with quantum computing company D-Wave to use D-Wave’s equipment to improve network operations.

Japan’s leading telecom company, NTT-Docomo, has demonstrated results from a pilot program that uses D-Wave’s quantum computing systems to optimize network traffic. The systems help predict how phones will move through the network.

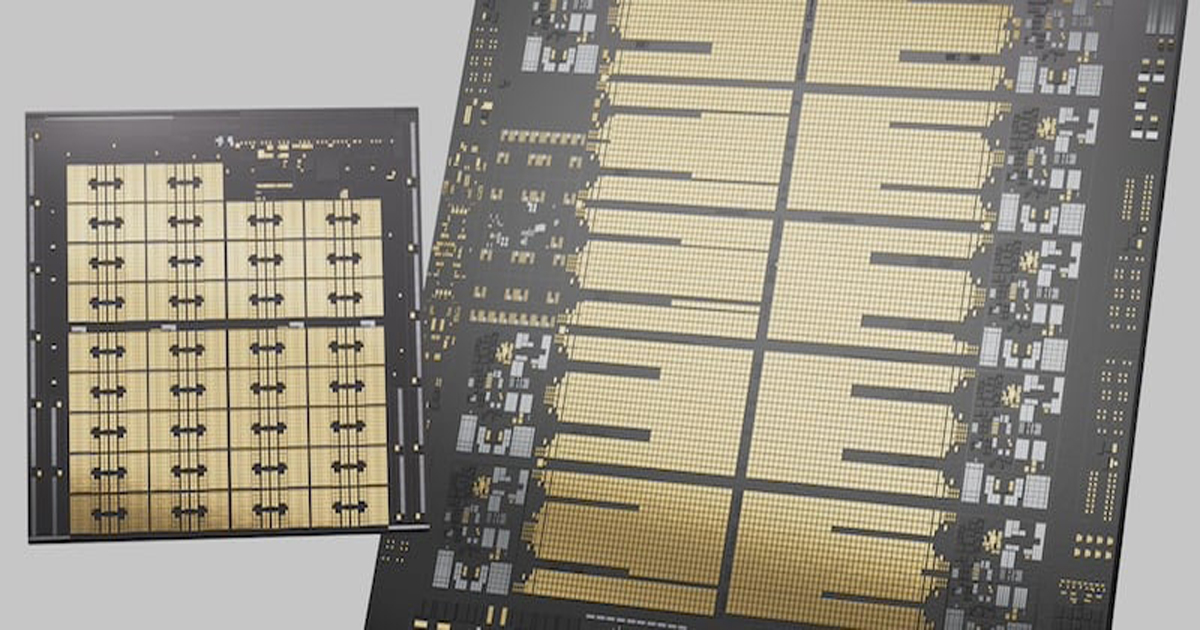

D-Wave quantum processor

By predicting how phones travel, the network can reduce the number of paging signals its equipment sends. This makes device tracking more efficient and enables smoother tower-to-tower handoffs.

Telecom Companies Strive to Optimize Coverage

The pilot program analyzes massive amounts of historical mobile device movement data to predict which combination of base stations can best re-establish a connection to a device. Cell phone towers have a fixed capacity for data throughput. Load can be balanced across towers whose coverage overlaps. However, it takes resources to manage the overlap and shift connections as the network nears capacity. The paging signal is key to that overlap and shift management process.

Telco companies must reduce the required number of paging signals.

Telecom companies analyze cellular device movement both to plan tower installations and to ensure adequate coverage. To do this, they must predict device movement throughout an installed network. With predictive analysis, towers that are unlikely to see a specific device do not need to page it as often. The pilot program demonstrated that the network could reduce these pages by about 15% overall. Such an improvement can extend service to 20% more mobile devices during peak demand times.
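The idea of paging only the towers likely to see a device can be illustrated with a small Python sketch. This is a simplified greedy heuristic, not the optimization NTT-Docomo ran on D-Wave hardware. The function name `stations_to_page`, the `coverage` threshold, and the sample history are all hypothetical.

```python
from collections import Counter

def stations_to_page(history, coverage=0.85):
    """Pick the smallest set of stations whose share of past
    reconnections reaches `coverage`, most frequent station first."""
    counts = Counter(history)
    total = sum(counts.values())
    chosen, covered = [], 0
    for station, n in counts.most_common():
        # Stop once the chosen stations account for enough past reconnections.
        if covered >= coverage * total:
            break
        chosen.append(station)
        covered += n
    return chosen, covered / total

# Hypothetical history: where one device reconnected on 10 past occasions.
history = ["A"] * 6 + ["B"] * 3 + ["C"] * 1
chosen, covered = stations_to_page(history)
print(chosen, covered)
```

In this toy data, station C rarely sees the device, so it is left out of the paging set. A production system would have to weigh many devices and stations at once, which is what makes the real problem combinatorial.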

Turning to Probabilistic Computing

The calculation typically took about 27 hours on conventional computing equipment. Adding D-Wave’s annealing quantum computers to the hardware set brought the calculation time down to just 40 seconds, roughly a 2,400-fold reduction. The technique is known as quantum annealing, a hardware counterpart to the classical algorithm called simulated annealing. Annealing uses probabilities to search for the most likely solution to a complex optimization problem. It explores candidate solutions with a degree of randomness and gradually reduces that randomness, so the search settles on a low-cost answer rather than stalling at the first local optimum it finds.
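The annealing idea described above can be sketched with classical simulated annealing in Python. This is an illustration only, not NTT-Docomo's or D-Wave's code, and it runs on an ordinary computer rather than quantum hardware. The toy cost function, the cooling schedule, and every name here are assumptions.

```python
import math
import random

def simulated_annealing(cost, n_bits, steps=5000, t_start=2.0, t_end=0.01, seed=0):
    """Minimize cost(bits) over lists of n_bits zeros and ones (toy sketch)."""
    rng = random.Random(seed)
    state = [rng.randint(0, 1) for _ in range(n_bits)]
    current = cost(state)
    best_state, best_cost = state[:], current
    for step in range(steps):
        # Geometric cooling: temperature falls from t_start to t_end.
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(n_bits)
        state[i] ^= 1  # propose flipping one randomly chosen bit
        candidate = cost(state)
        delta = candidate - current
        # Always accept improvements; accept worse moves with
        # probability exp(-delta / t), which shrinks as t falls.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current = candidate
            if current < best_cost:
                best_state, best_cost = state[:], current
        else:
            state[i] ^= 1  # rejected: undo the flip
    return best_state, best_cost

# Hypothetical toy cost: number of bits that differ from a hidden target.
TARGET = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 0, 1]

def toy_cost(bits):
    return sum(b != t for b, t in zip(bits, TARGET))

best, best_cost = simulated_annealing(toy_cost, len(TARGET))
print(best, best_cost)
```

Early on, the high temperature lets the search accept worse solutions and escape local optima. As the temperature falls, it increasingly keeps only improvements.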

Probabilistic math works well in analysis like this because human behavior is involved in the data set. Deterministic math will generally fail to deliver a reliable answer because it is impossible to predict exactly what an individual or set of individuals will do. Probabilistic functions instead give a “most likely” answer.

Defining Key Terms of the Pilot Program

The fundamental unit in D-Wave’s quantum computers is a quantum bit, or qubit. A conventional digital bit holds a value of either one or zero, represented by a voltage or the absence of a voltage, respectively. A qubit can represent one, zero, or a superposition of both at the same time. The quantum phenomenon of superposition enables the storage and manipulation of multiple states simultaneously. Quantum entanglement, the correlation between distinct particles, and quantum tunneling help the hardware explore many candidate solutions quickly. With quantum computing hardware, probabilistic methods like annealing can run significantly faster, as evidenced by NTT-Docomo’s calculation time shrinking from 27 hours to 40 seconds.

Overview of quantum computing infrastructure.

Quantum annealers are a specialized form of quantum computer developed for optimization and probabilistic analysis. General-purpose quantum computers use combinations of quantum gates, much like conventional digital computers use combinations of digital logic gates. Quantum annealers instead let the system evolve continuously over time. That evolution follows the Schrödinger equation, which describes how the quantum-mechanical wave function changes. This capability is useful for real-world problems involving large amounts of data, where no single exact answer exists and a good approximate answer is sufficient.
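For readers who want the math behind this description, the time-dependent Schrödinger equation and a commonly used general form of an annealing Hamiltonian are shown below. The symbols H_driver, H_problem, A(s), B(s), and the anneal time t_a are generic notation assumed here, not details taken from the pilot program.

```latex
i\hbar \frac{\partial}{\partial t}\lvert\psi(t)\rangle = H(t)\,\lvert\psi(t)\rangle,
\qquad
H(s) = A(s)\,H_{\text{driver}} + B(s)\,H_{\text{problem}},
\quad s = t/t_a \in [0, 1]
```

In this picture, A(s) shrinks and B(s) grows as the anneal proceeds. The system starts in an easy-to-prepare state and ends in a state whose low-energy configurations encode good solutions to the problem.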

Adding Quantum Capability to a Network Infrastructure

D-Wave supplied annealing-type quantum computers designed for combinatorial optimization problems such as NTT-Docomo’s predictive analysis problem. The quantum computer infrastructure was laid out to solve problems at multiple scales and optimize computing processes for each problem's scope.
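Combinatorial optimization problems are commonly handed to annealers as a quadratic unconstrained binary optimization (QUBO) problem. The Python sketch below builds a toy QUBO and solves it by brute force on a conventional computer. It makes no D-Wave API calls. The "pick exactly k stations" problem, the probabilities, the penalty weight, and all function names are hypothetical and do not describe NTT-Docomo's actual formulation.

```python
from itertools import product

def build_qubo(probs, k, penalty):
    """QUBO for: pick exactly k stations with the highest summed probability.

    Minimizes  -sum(p_i * x_i) + penalty * (sum(x_i) - k)**2,  x_i in {0, 1}.
    Expanding the square (using x_i**2 == x_i) gives linear and pairwise
    terms; the constant penalty * k**2 is dropped since it does not
    change which assignment is best.
    """
    n = len(probs)
    q = {}
    for i in range(n):
        q[(i, i)] = -probs[i] + penalty * (1 - 2 * k)  # linear term
        for j in range(i + 1, n):
            q[(i, j)] = 2 * penalty  # pairwise term
    return q

def energy(q, x):
    """Evaluate the QUBO objective for a 0/1 assignment x."""
    return sum(w * x[i] * x[j] for (i, j), w in q.items())

def brute_force(q, n):
    """Try every assignment; only feasible for very small n."""
    return min(product((0, 1), repeat=n), key=lambda x: energy(q, x))

# Hypothetical chance that each of five stations can reach a device.
probs = [0.40, 0.05, 0.30, 0.10, 0.15]
q = build_qubo(probs, k=2, penalty=2.0)
best = brute_force(q, len(probs))
print(best)
```

Brute force checks all 2^n assignments, which becomes impractical as the number of stations grows. That growth is the reason such problems are candidates for annealing hardware.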

One of the challenges with today’s quantum computers is that they require frequent maintenance. They are built with specialized cryogenically cooled hardware and cannot be used while warmed up for maintenance. Redundancy is therefore vital so that computing loads can still be covered while one piece of equipment is offline. Conventional telecom equipment, by contrast, is designed around hot maintenance, with no need to power down. D-Wave and NTT-Docomo created a redundant system topology to support maintenance downtime without degrading overall system performance.

Out of the Laboratory and Into the Real World

Quantum computing has been “almost here” for several years now. Most systems, however, are still relegated to research and have not left the laboratory. D-Wave and NTT-Docomo’s pilot program shows that quantum computing is real and ready for use for some applications. The initial pilot covered three regions in Japan. Based on the results, NTT-Docomo plans to deploy the system in branch offices nationwide.